Regulate AI's Autonomy, not its Adaptability.

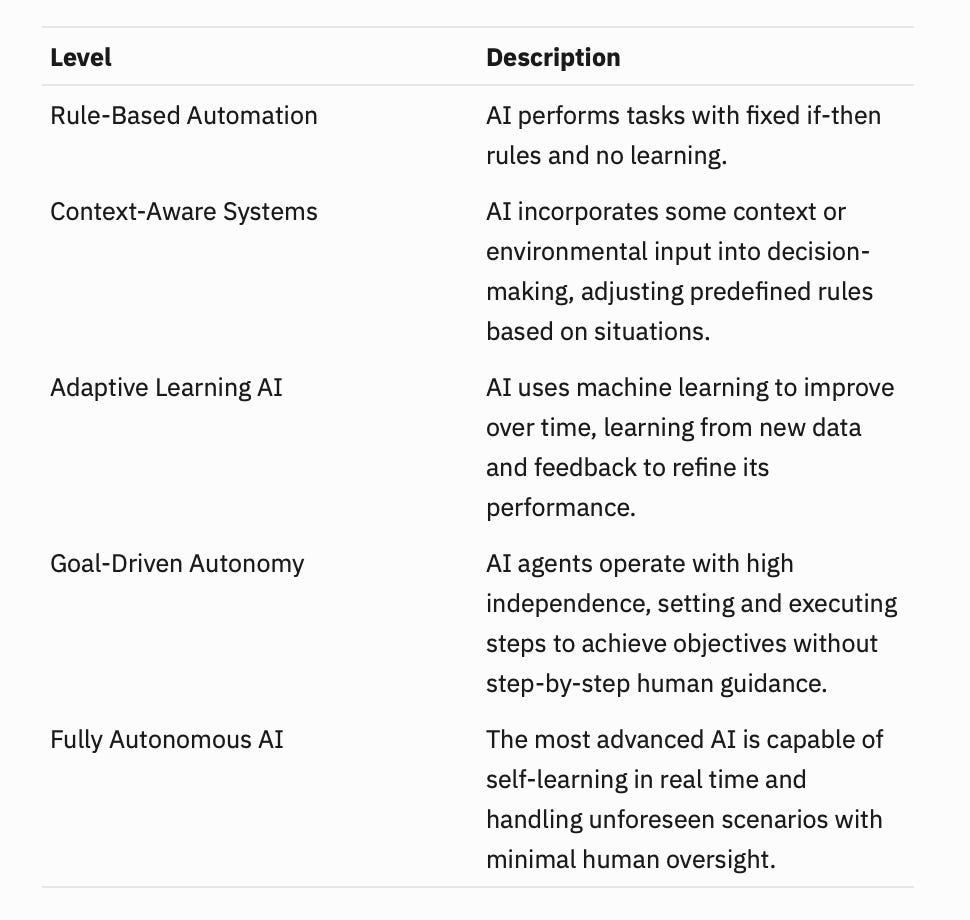

In my last post on AI’s on-the-job training, I suggested that AI's skills and capabilities would emerge in stages, from simple rule-based processes to, perhaps one day, autonomy …

However, I did not deal with the complicating factor of regulation, which is increasingly a reality for developers around the world.

Many in the US have criticized the EU AI Act as too restrictive. However, proposed regulations in Texas (where Oracle and several of Elon Musk's companies are headquartered) could be even more stringent.

In short, regulation is coming, one way or another, and we need to think carefully about what its effects might be.

Regulating for vulnerabilities

I have written at some length in two previous posts about a model of regulation that would consider vulnerabilities (who or what might be affected by AI, whatever the model or capability) rather than risks (what could go wrong with any specific model).

In a vulnerability-focused model, it would make more sense to regulate autonomy than any other feature of an AI.

Autonomy = Decision-Making Power: Regulating how AI is deployed (e.g., in hiring or healthcare) targets real-world consequences, not opaque algorithms.

Complexity ≠ Accountability: A model’s adaptability or complexity is hard to legislate. Autonomy, however, determines its authority, which makes it a clearer regulatory target.

Protecting Vulnerabilities: Marginalized groups face disproportionate harm when AI operates unchecked. Autonomy limits in high-stakes areas (e.g., welfare allocation) could enforce ethical guardrails.

Thus, restricting AI autonomy in some way (e.g., requiring human oversight for AI-driven decisions affecting vulnerable groups) seems like a reasonable and pragmatic approach to ensure fairness, accountability, and ethical safeguards while still allowing AI’s benefits to be realized.

But I cannot pretend that it would have no effect on AI development. If autonomy is restricted, I expect AI’s evolution would look rather like this:

Autonomy Plateaus (the red line): Human oversight caps AI’s decision-making independence in critical domains.

Adaptability Grows (the blue line): AI optimizes within regulatory constraints (e.g., refining medical diagnoses under clinician review).

Complexity Surges (the purple line): Compliance layers (audits, explainability tools) inflate system intricacy.

This approach could also have downsides beyond its effect on AI development.

Reinforcing Human Bias

Ironically, mandating human oversight might reintroduce biases that AI could mitigate. For instance:

An AI judge programmed to ignore demographics might suggest unbiased sentences, but human reviewers, subject to their own implicit biases, could override them.

Studies have documented automation bias, the tendency of humans to over-trust AI outputs (Green, 2022). Conversely, distrust might lead to arbitrary overrides.

False Security in "Human-in-the-Loop" Systems

Requiring human reviewers can breed complacency. Developers might put less effort into fixing flaws in their models, assuming that humans in the loop (or over the loop) will catch any errors. The result is that AI and human weaknesses compound each other, increasing the potential for harm.

Regulatory Arbitrage

Firms may relocate to jurisdictions with more favorable laws. "Favorable" need not mean "lax": a company might choose a location not for its less strict rules but because its governance model suits the company's business model.

Balancing it out

One path forward is an adaptive approach that protects vulnerabilities without stifling innovation. We might structure this around a couple of strategies:

Tiered Autonomy Controls

Match regulatory strictness to the context’s vulnerability:

Low Stakes (e.g., movie recommendations): Minimal oversight, allowing innovation.

High Stakes (e.g., medical diagnostics): Mandate human review, transparency, and rigorous testing.

Europe’s current AI ethics guidelines imply this calibrated approach: “All other things being equal, the less oversight a human can exercise over an AI system, the more extensive testing and stricter governance is required.” But it's not quite there. A better formulation would be: “All other things being equal, the more vulnerable the users or systems affected by an AI deployment are, the more human oversight is required.”

Concretely, regulators could define tiers of AI autonomy just like the stages I described earlier, from rule-based systems to full unsupervised autonomy.
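To make the tiering idea more concrete, here is a minimal Python sketch of what such a rule table might look like. The autonomy tier names, the three vulnerability levels, and the ceiling assigned to each are hypothetical illustrations, not taken from the EU guidelines or any existing regulation. The only rule the sketch encodes is the one argued for here: the more vulnerable the people affected, the less autonomy an AI system is allowed.

```python
from enum import IntEnum


class Autonomy(IntEnum):
    """Hypothetical autonomy tiers, ordered from least to most independent."""
    RULE_BASED = 0   # follows fixed, human-written rules
    SUPERVISED = 1   # proposes decisions; a human reviews each one before it takes effect
    MONITORED = 2    # acts on its own; humans audit decisions afterwards
    AUTONOMOUS = 3   # acts without routine human review


class Vulnerability(IntEnum):
    """Hypothetical vulnerability levels of the people or systems affected."""
    LOW = 0       # e.g. movie recommendations
    MODERATE = 1
    HIGH = 2      # e.g. medical diagnostics, welfare allocation


# Hypothetical ceiling on autonomy for each vulnerability level:
# as vulnerability rises, the permitted autonomy tier falls.
MAX_AUTONOMY = {
    Vulnerability.LOW: Autonomy.AUTONOMOUS,
    Vulnerability.MODERATE: Autonomy.MONITORED,
    Vulnerability.HIGH: Autonomy.SUPERVISED,  # high stakes: mandatory human review
}


def is_permitted(vulnerability: Vulnerability, autonomy: Autonomy) -> bool:
    """Return True if the requested autonomy tier is at or below the ceiling."""
    return autonomy <= MAX_AUTONOMY[vulnerability]


if __name__ == "__main__":
    print(is_permitted(Vulnerability.LOW, Autonomy.AUTONOMOUS))   # True
    print(is_permitted(Vulnerability.HIGH, Autonomy.MONITORED))   # False
```

A lookup table like this is deliberately simple. The hard regulatory work lies in deciding which vulnerability level a given deployment falls into, not in checking it against the ceiling.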

Adaptive Governance

Dynamic regulations would update rules as AI capabilities and society's needs change over time. A vulnerability-oriented AI governance model could strike this balance by being context-aware. It might explicitly ask, “Who could be hurt by this AI, and how do we shield them?”, a question that aligns with fundamental principles of law in many jurisdictions.

Target Hardening: Whether it’s a type of decision, a demographic at risk, or a security flaw in the tech, attempt to protect what is most likely to break or cause injustice, rather than broadly limiting everything.

The principle is that as the sensitivity of the context increases, the allowable autonomy decreases.

Regulating AI autonomy with a vulnerability-based focus offers a path beyond one-dimensional risk calculations and instead asks how we can reinforce the fragile links in the chain of AI deployment. By doing so, we may find a governance model that is vigilant about harm, adaptive to change, and ultimately aligned with society's values and interests.

In my next and final post on this topic, I will look at what Jensen Huang’s HR department of the future, one that manages AI agents, might look like.