Why is AI so bad at drawing hands?

... And why that's a problem

Why can’t AI art generators draw hands? Because AIs draw what hands look like, but they don’t know how hands work. Why can’t untrained humans draw hands? Because we do know how hands work, which hinders us drawing what they look like.

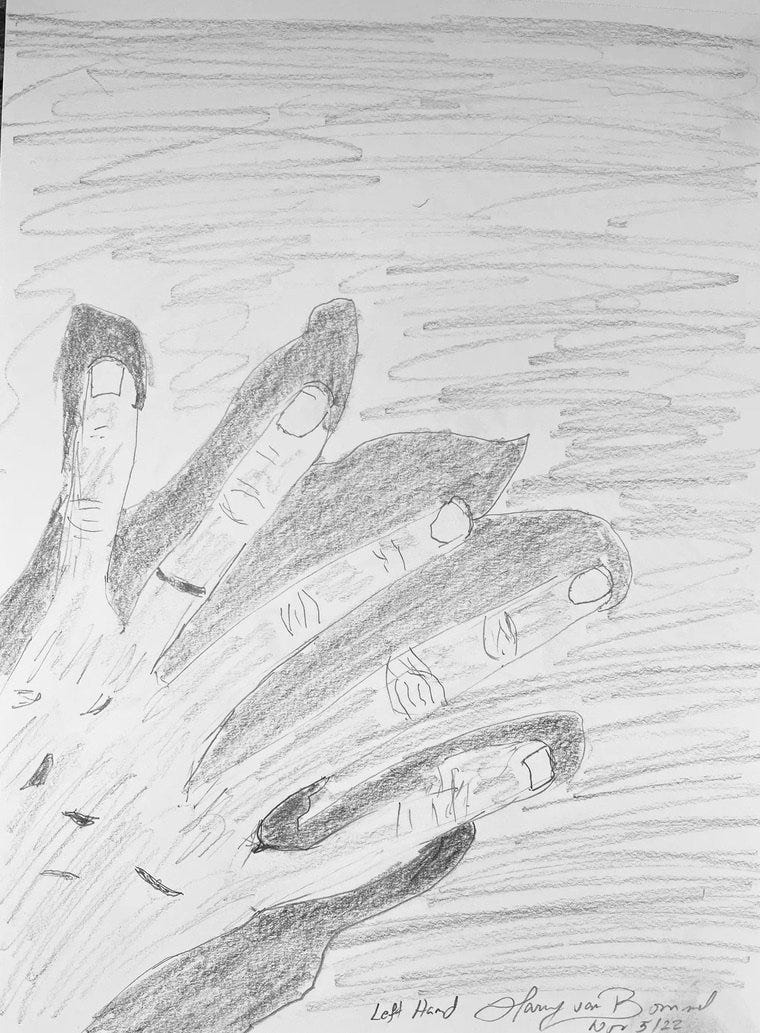

Check out the examples below, one generated by the MidJourney AI and one from a student of the renowned drawing teacher Betty Edwards before her classes.

What’s going on here?

Humans first …

Dr Edwards called her famous book Drawing on the Right Side of the Brain, because she believes that we have to overcome our left-brain-mode, analytic understanding of the world - how a hand and fingers work, for example - in order to unlock a right-brain-mode that is visual and perceives the world as it shows up for us. We have to un-learn how hands work, or at least stop fretting about it, and instead see them: their edges, spaces, lines, light and shadows. When we learn to see and draw those visual attributes, rather than the mental schema of what a hand should be, then our drawing improves.

Here’s another drawing by the same student after one day of Dr Edwards’ training.

Learning to see not the hand as a concept but the shapes, lines and shades enables us to create a better likeness, but … we still know how hands work.

So now to our AI applications. Surely they are creating images based on what they see, so they should be drawing hands properly? But look again at the AI images above. They are beautifully rendered in light and shade. But, as Gary Marcus describes, they are only creating a visual pastiche - a mannered imitation of what they think a human being is looking for when asking for a particular image in a particular style. They don’t understand the anatomy of fingers (or the mechanics of chopsticks for that matter) and they are not drawing a representation of what they see. They are just making up a more-or-less convincing imitation of what they think we expect to see.

The danger of pastiche code

The problem with these AI applications - whether generating art or chat - is that it’s all pastiche without knowledge behind it. Here’s a knitting pattern I asked the davinci-003 model to write:

To a knitter like me, this is very nearly right but with one big mistake. If you’re knitting K2,P2 (a repeating pattern of 4 stitches) then you must cast on a multiple of 4 stitches. But the model does not know that.

Now this worries me, not because your knitted scarf would be screwy, but because a knitting pattern is in many ways an algorithm. It reads like pseudo-code, doesn’t it?

We could easily make two errors here. You may simply overlook the 46 stitches and carry on, finding the error only when things go wrong. Perhaps more seriously, you may be fooled into thinking the AI actually understands knitting and then be tempted to use it in cases which are more difficult to debug.
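To make the first error concrete, here is a minimal Python sketch of the check the model missed. It assumes only the rule stated above (K2,P2 repeats every 4 stitches, so the cast-on must be a multiple of 4) and the 46-stitch cast-on from the generated pattern; the function and variable names are illustrative, not taken from any library.

```python
# Hypothetical helper names; the only facts taken from the text are the
# K2,P2 repeat (4 stitches) and the cast-on of 46 stitches.

K2_P2 = ["K", "K", "P", "P"]  # one repeat of the rib pattern


def leftover_stitches(cast_on: int, repeat: list[str]) -> int:
    """Stitches left over after fitting whole repeats into one row."""
    return cast_on % len(repeat)


def nearest_valid_cast_ons(cast_on: int, repeat: list[str]) -> tuple[int, int]:
    """Largest fitting count at or below cast_on, and the next one up."""
    below = cast_on - leftover_stitches(cast_on, repeat)
    above = below + len(repeat)
    return below, above


print(leftover_stitches(46, K2_P2))       # 2: the row ends mid-repeat
print(nearest_valid_cast_ons(46, K2_P2))  # (44, 48)
```

A check like this encodes the intent of the pattern (whole repeats per row) rather than its appearance, which is the part a pastiche leaves out.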

Code which is just a pastiche of what good code should look like could be disastrous. In my own experience, the more complex code becomes, the more difficult it is for test and QA to understand the intent of the programmer and from there to work out if the code is working correctly. Not just if the code is valid, but whether it achieves its intent.

A pastiche of good code could include bugs we may never find, especially if (unlike the knitting pattern) they don’t actually raise an error.

More on this in the future, including the nature of any knowledge AI may have. Can an AI have justified true belief? I am thinking about Gettier cases in epistemology or the similar work of Dharmottara regarding pramana.

I may be more worried about code that just happens to work out in most cases, but is so convincing that we do not properly validate it.